Embody

Social VR Experience, January 2019

Client

Contributions

- Experience Strategy

- Technical Direction

- Prototyping

- Software Development

- Systems Design

Embody is a VR experience that draws on the body’s potential within the digital landscape, using movement to navigate through an immersive virtual experience. The project was featured as an installation in the Sundance Film Festival’s 2019 New Frontier exhibition, where participants were able to experience mindfulness and meditation in a space unlike any yoga studio. In collaboration with MAP Design Lab and lululemon’s Whitespace team, Glowbox provided the technical direction and software development to make this concept a reality.

Participants prepare for the experience at Sundance

Tree pose, one of the chapters within Embody

In this cooperative experience, which needed only headsets and yoga mats, two participants were guided by a virtual avatar through a series of body postures and soothing landscapes, flowing through an ever-changing world of flower petals and colorful fields. The users attempted to accurately mirror poses prompted by the avatar, and the more successful their movements, the more dramatically their environment responded. Without real-world obstacles, the participants were able to achieve a sense of relaxation, connecting with their bodies within the shifting landscapes.
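
One way to picture the pose-mirroring feedback is as a similarity score between the avatar's target pose and the tracked pose. The sketch below is illustrative only and is not the scoring used in Embody: the joint names, the 2D coordinates, and the `normalize` and `pose_match` helpers are all hypothetical. It centers and scales both poses so that position and body size do not matter, then takes the cosine similarity of the joint coordinates.

```python
import math


def normalize(pose):
    """Center a pose on its mean joint position and scale it to unit size."""
    joints = sorted(pose)
    dims = len(pose[joints[0]])
    centroid = [sum(pose[j][d] for j in joints) / len(joints) for d in range(dims)]
    centered = {j: [pose[j][d] - centroid[d] for d in range(dims)] for j in joints}
    # Fall back to 1.0 when every joint sits on the same point.
    scale = math.sqrt(sum(c * c for v in centered.values() for c in v)) or 1.0
    return {j: [c / scale for c in v] for j, v in centered.items()}


def pose_match(target, observed):
    """Return a score in [0, 1]; values near 1 mean the poses have the same shape."""
    if set(target) != set(observed):
        raise ValueError("poses must contain the same joints")
    t, o = normalize(target), normalize(observed)
    # Both poses are unit-scaled, so this dot product is a cosine similarity.
    dot = sum(a * b for j in t for a, b in zip(t[j], o[j]))
    return max(0.0, min(1.0, dot))


# Hypothetical joints and coordinates (metres), for illustration only.
tree_pose = {"head": (0.0, 1.7), "hip": (0.0, 1.0),
             "left_foot": (0.0, 0.0), "right_foot": (0.1, 0.6)}
standing = {"head": (0.0, 1.7), "hip": (0.0, 1.0),
            "left_foot": (-0.1, 0.0), "right_foot": (0.1, 0.0)}

print(pose_match(tree_pose, tree_pose))
print(pose_match(tree_pose, standing))
```

A score like this could then drive how strongly the environment reacts, with a higher match producing a more dramatic change.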

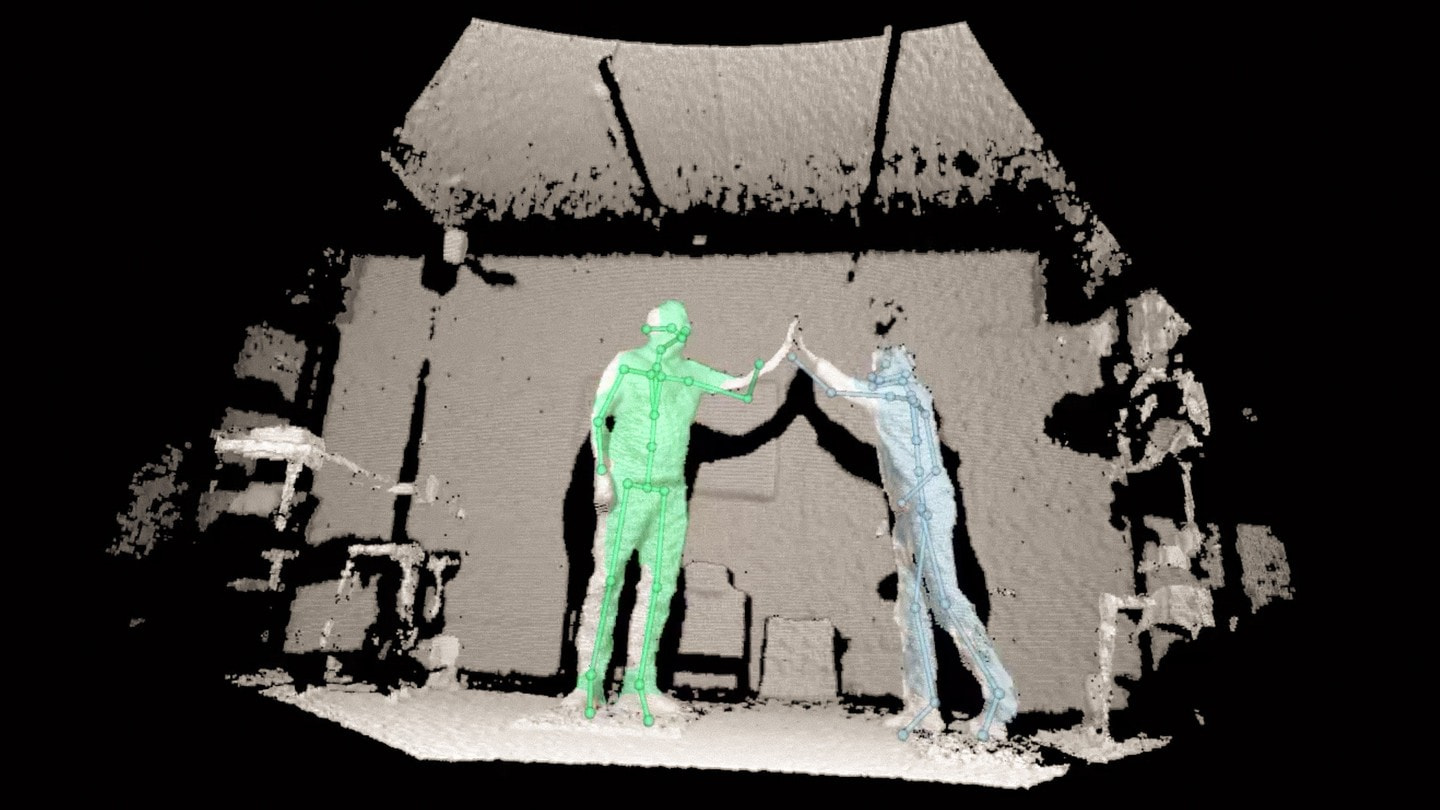

With aesthetics and user experience in mind, we combined machine learning, computer vision, and directional audio into a seamless, calibrated system that let users pilot their experience with only their bodies. Thanks to pressure-sensitive mats and skeletal tracking, participants could move fluidly without the need for controllers, trackers, or markers.
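
As a rough illustration of what a pressure-sensitive mat can contribute, the sketch below computes a center of pressure from a small grid of readings. It is an assumption-laden example rather than the project's code: the grid layout, the sample values, and the `center_of_pressure` helper are hypothetical, and it uses plain Python rather than the OpenCV pipeline used in the installation.

```python
def center_of_pressure(grid):
    """Return the pressure-weighted (row, col) centroid, or None if the mat is unloaded."""
    total = sum(sum(row) for row in grid)
    if total <= 0:
        return None
    row_pos = sum(i * value for i, row in enumerate(grid) for value in row) / total
    col_pos = sum(j * value for row in grid for j, value in enumerate(row)) / total
    return row_pos, col_pos


# Hypothetical 3x3 mat reading: weight resting on the middle row, toward the right.
reading = [
    [0, 0, 0],
    [0, 2, 2],
    [0, 0, 0],
]
print(center_of_pressure(reading))
```

Tracking how this point shifts over time gives a simple signal for balance and weight transfer without any wearable hardware.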

“It’s a critical moment culturally, with the advent of spatial computing and machine learning, for us to put the body back at the center of the potential relationship between technology and humans.”

So how did we do it? The VR experience is built in Unity, and we made extensive use of its compute shaders, entity component system, post-processing stack, and timeline features to bring the design to life. A stereoscopic ZED camera let us recover 3D data from 2D pose projections (Python + TensorFlow), and pressure mats supplied data that we processed with Python + OpenCV. We unified these sensing technologies in a calibrated coordinate system to create a smooth, responsive experience.
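
To make the idea of a calibrated coordinate system concrete, here is a minimal sketch under stated assumptions, not the production pipeline. It assumes a pinhole camera model with known intrinsics (`fx`, `fy`, `cx`, `cy`), a metric depth value for each 2D keypoint, and a rigid transform (rotation plus translation) obtained from some calibration step. The function names and all numbers are hypothetical, and the ZED SDK is not used here.

```python
def backproject(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with metric depth to a 3D point in the camera frame."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def to_world(point, rotation, translation):
    """Apply world = R * point + t, where R is a 3x3 matrix given as nested rows."""
    return tuple(
        sum(rotation[i][k] * point[k] for k in range(3)) + translation[i]
        for i in range(3)
    )


# Hypothetical intrinsics for a 1280x720 image and a keypoint two metres away.
FX, FY, CX, CY = 700.0, 700.0, 640.0, 360.0
wrist_camera = backproject(990.0, 360.0, 2.0, FX, FY, CX, CY)

# Hypothetical calibration: camera axes aligned with the world, offset by one metre in x.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
wrist_world = to_world(wrist_camera, IDENTITY, (1.0, 0.0, 0.0))
print(wrist_camera, wrist_world)
```

Once every sensor has its own transform into the same world frame, skeleton joints and mat readings can be compared directly, which is what lets separate sensing systems behave as one.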

A virtual environment doesn’t need to eliminate the physical body. By pushing the boundaries of what’s possible within new media technologies, we can combine and expand on technical developments to create a natural sense of embodiment. Immersive platforms have incredible potential to expand on creative culture as it transforms, not just as entertainment, but as a purposeful way to benefit the body and education.

An antechamber leads into the VR space

Setting up the installation at Sundance

Diagnostic view of the body tracking system

Testing depth cameras with skeletal tracking

Credits

- Lead Artists

  - Melissa Painter

  - Thomas Wester

  - Siân Slawson

- Key Collaborators

  - Joey Verbeke

  - Jordan Goldfarb

  - Ben Purdy

  - Peter Rubin

  - Eric Adrian Marshall

- Producer

  - Kate Wolf

- Concept Artist

  - Yuehao Jiang

- Lead Technical Artist

  - Candice Colbert

- Lead Developers

  - Quin Kennedy

  - Jeremy Rotsztain

- Movement Consultant

  - Olivia Barry

- Executive Producers

  - Tom Waller

  - Mark Oleson

- Researchers

  - Sian Allen

  - Kate Henderson

  - Carolyn Derynck

- Production Manager

  - Brandon Jung

- In Partnership With

  - MAP Design Lab

  - lululemon Whitespace

- In Partnership With

  - Microsoft

Tools & Technologies

- Unity

- TensorFlow

- OpenCV

- Windows Mixed Reality